X (formerly Twitter) claims that non-consensual nudity is not tolerated on its platform. But a recent study shows that X is more likely to quickly remove this harmful content—sometimes known as revenge porn or non-consensual intimate imagery (NCII)—if victims flag content through a Digital Millennium Copyright Act (DMCA) takedown rather than using X's mechanism for reporting NCII.

In the pre-print study, which 404 Media noted has not been peer-reviewed, University of Michigan researchers explained that they put X's non-consensual nudity policy to the test to show how challenging it is for victims to remove NCII online.

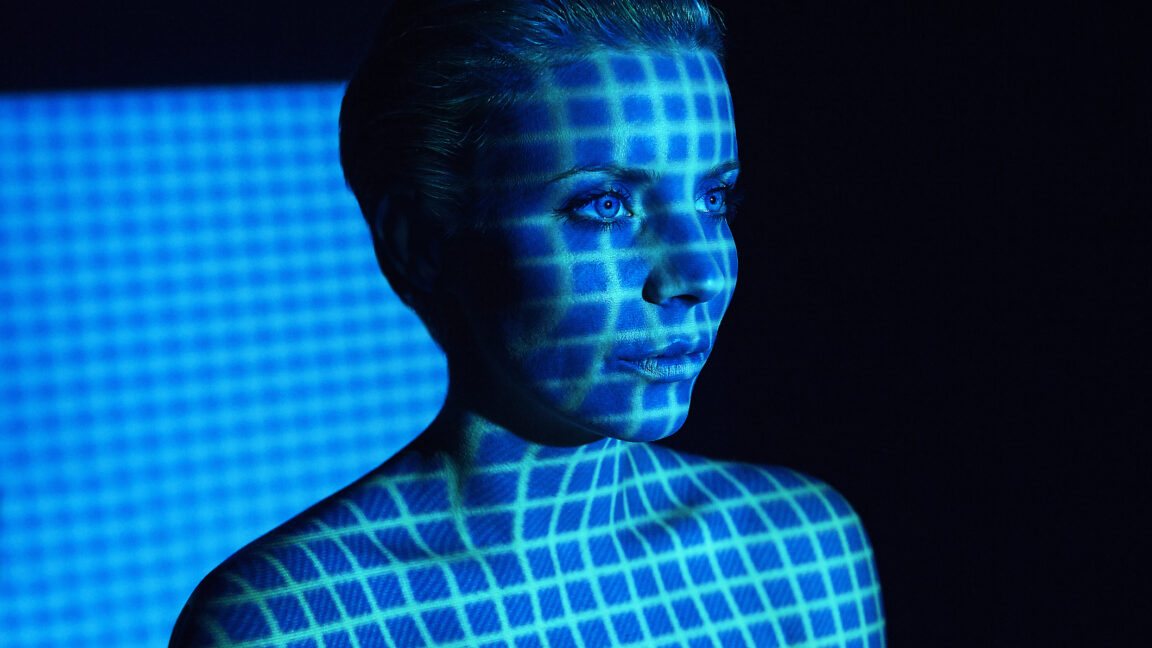

To conduct the experiment, the researchers created two sets of X accounts to post and report AI-generated NCII "depicting white women appearing to be in their mid-20s to mid-30s" as "nude from the waist up, including her face." (White women were selected to "minimize potential confounds from biased treatment," and future research was recommended on other genders and ethnicities.) Out of 50 fake AI nude images that researchers posted on X, half were reported as violating X's non-consensual nudity policy, and the other half were reported through X's DMCA takedown mechanism.

The researchers gave X up to three weeks to remove the content through each reporting mechanism, and the difference, they found, was "stark." While the DMCA mechanism triggered removals for all 25 images within 25 hours and temporary suspensions of all accounts sharing NCII, flagging content under X's non-consensual nudity policy led to no response and no removals.

This significant discrepancy is a problem, researchers suggested, since only those victims who actually took the photo or video that was shared without their consent own the copyright needed to initiate a DMCA takedown. And ultimately, the DMCA takedown process may be too costly, dissuading even those victims who can use it.

Others who either didn't create the harmful images or cannot prove that they did don't have that option. Given X's reportedly slow, and sometimes seemingly nonexistent, response to reports made through its internal NCII mechanism, these victims seemingly aren't guaranteed a quick removal, and this troubles researchers.

"Unfortunately," NCII "content often remains online for years without being addressed," the study said.

The researchers said that NCII causes the most harm in the first 48 hours it's online, and the issue is widespread. One in eight US adults either becomes a target of revenge porn or is threatened with it, the study noted. And the problem has only been "exacerbated" by AI-generated NCII, researchers said, which puts anyone who posts a photo online at risk of image-based sexual abuse, as the FBI warned last year. In those AI cases, the DMCA takedown process likely would not apply, either, seemingly leaving a widening pool of victims to wait for X's internal reporting mechanism to remove NCII.

These findings, researchers said, suggest that a "shift" is needed away from expecting platforms to police NCII. Instead, legislators should create a dedicated federal NCII law that works as effectively as the DMCA to mandate NCII takedowns by imposing "punitive actions for infringing users" as well as a legal mechanism to request removals. This would also potentially address some legal experts' concerns that "copyright laws leveraged to protect sexual privacy would 'distort the intellectual property system,'" researchers said.

"Protecting intimate privacy requires a shift from dependence on platform goodwill to enforceable legal regulations," the study recommended.

As it stands, platforms have no legal incentives to remove NCII, researchers warned.

Researchers and X did not immediately respond to Ars' request for comment.

In its most recent transparency report, X reported that it removed more than 150,000 posts violating its non-consensual nudity policy during the first half of 2024. More than 50,000 accounts were suspended, and there were 38,736 non-consensual nudity reports. The majority of suspensions and content removals were actioned by human moderators, with only 520 suspensions and about 20,000 removals being automated.

It seems possible that cuts to X's safety team after Elon Musk's takeover of the platform may have impacted how much NCII is actioned if human moderators are the key to a fast response. Last year, X started rebuilding that team, though. And earlier this year, X announced that it was launching a new trust and safety "center of excellence" amid a scandal involving Taylor Swift AI-generated porn.